2024 |


| Bayesian Strategy Networks Based Soft Actor-Critic Learning Journal Article ACM Transactions on Intelligent Systems and Technology, 15 (3), pp. 1–24, 2024. Tags: control, learning @article{Yang2024b, title = {Bayesian Strategy Networks Based Soft Actor-Critic Learning}, author = {Qin Yang and Ramviyas Parasuraman}, url = {https://dl.acm.org/doi/10.1145/3643862}, doi = {10.1145/3643862}, year = {2024}, date = {2024-03-29}, journal = {ACM Transactions on Intelligent Systems and Technology}, volume = {15}, number = {3}, pages = {1–24}, abstract = {A strategy refers to the rules that the agent chooses the available actions to achieve goals. Adopting reasonable strategies is challenging but crucial for an intelligent agent with limited resources working in hazardous, unstructured, and dynamic environments to improve the system’s utility, decrease the overall cost, and increase mission success probability. This paper proposes a novel hierarchical strategy decomposition approach based on Bayesian chaining to separate an intricate policy into several simple sub-policies and organize their relationships as Bayesian strategy networks (BSN). We integrate this approach into the state-of-the-art DRL method – soft actor-critic (SAC), and build the corresponding Bayesian soft actor-critic (BSAC) model by organizing several sub-policies as a joint policy. Our method achieves the state-of-the-art performance on the standard continuous control benchmarks in the OpenAI Gym environment. The results demonstrate that the promising potential of the BSAC method significantly improves training efficiency. Furthermore, we extend the topic to the Multi-Agent systems (MAS), discussing the potential research fields and directions.}, keywords = {control, learning}, pubstate = {published}, tppubtype = {article} } |

| Communication-Efficient Multi-Robot Exploration Using Coverage-biased Distributed Q-Learning Journal Article IEEE Robotics and Automation Letters, 9 (3), pp. 2622 - 2629, 2024. Tags: cooperation, learning, mapping, multi-robot, networking @article{Latif2024b, title = {Communication-Efficient Multi-Robot Exploration Using Coverage-biased Distributed Q-Learning}, author = {Ehsan Latif and Ramviyas Parasuraman}, url = {https://ieeexplore.ieee.org/document/10413563}, doi = {10.1109/LRA.2024.3358095}, year = {2024}, date = {2024-03-01}, journal = {IEEE Robotics and Automation Letters}, volume = {9}, number = {3}, pages = {2622 - 2629}, abstract = {Frontier exploration and reinforcement learning have historically been used to solve the problem of enabling many mobile robots to autonomously and cooperatively explore complex surroundings. These methods need to keep an internal global map for navigation, but they do not take into consideration the high costs of communication and information sharing between robots. This study offers CQLite, a novel distributed Q-learning technique designed to minimize data communication overhead between robots while achieving rapid convergence and thorough coverage in multi-robot exploration. The proposed CQLite method uses ad hoc map merging, and selectively shares updated Q-values at recently identified frontiers to significantly reduce communication costs. The theoretical analysis of CQLite's convergence and efficiency, together with extensive numerical verification on simulated indoor maps utilizing several robots, demonstrates the method's novelty. With over 2x reductions in computation and communication alongside improved mapping performance, CQLite outperformed cutting-edge multi-robot exploration techniques like Rapidly Exploring Random Trees and Deep Reinforcement Learning.}, keywords = {cooperation, learning, mapping, multi-robot, networking}, pubstate = {published}, tppubtype = {article} } |

| Object-Oriented Material Classification and 3D Clustering for Improved Semantic Perception and Mapping in Mobile Robots Conference 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2024), 2024. Tags: learning, mapping, perception @conference{Ravipati2024, title = {Object-Oriented Material Classification and 3D Clustering for Improved Semantic Perception and Mapping in Mobile Robots}, author = {Siva Krishna Ravipati and Ehsan Latif and Suchendra Bhandarkar and Ramviyas Parasuraman}, url = {https://ieeexplore.ieee.org/document/10801936}, doi = {10.1109/IROS58592.2024.10801936}, year = {2024}, date = {2024-10-13}, booktitle = {2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2024)}, pages = {9729-9736}, abstract = {Classification of different object surface material types can play a significant role in the decision-making algorithms for mobile robots and autonomous vehicles. RGB-based scene-level semantic segmentation has been well-addressed in the literature. However, improving material recognition using the depth modality and its integration with SLAM algorithms for 3D semantic mapping could unlock new potential benefits in the robotics perception pipeline. To this end, we propose a complementarity-aware deep learning approach for RGB-D-based material classification built on top of an object-oriented pipeline. The approach further integrates the ORB-SLAM2 method for 3D scene mapping with multiscale clustering of the detected material semantics in the point cloud map generated by the visual SLAM algorithm. Extensive experimental results with existing public datasets and newly contributed real-world robot datasets demonstrate a significant improvement in material classification and 3D clustering accuracy compared to state-of-the-art approaches for 3D semantic scene mapping.}, keywords = {learning, mapping, perception}, pubstate = {published}, tppubtype = {conference} } |

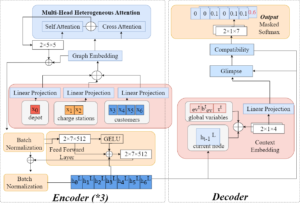

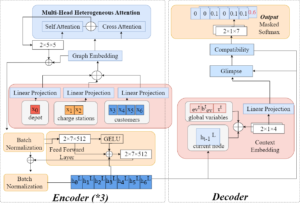

| Route Planning for Electric Vehicles with Charging Constraints Conference 2024 IEEE 100th Vehicular Technology Conference (VTC2024-Fall), 2024. Tags: control, learning, multi-robot systems @conference{Munir2024c, title = {Route Planning for Electric Vehicles with Charging Constraints}, author = {Aiman Munir and Ramviyas Parasuraman and Jin Ye and WenZhan Song}, url = {https://ieeexplore.ieee.org/abstract/document/10757558}, doi = {10.1109/VTC2024-Fall63153.2024.10757558}, year = {2024}, date = {2024-10-10}, booktitle = {2024 IEEE 100th Vehicular Technology Conference (VTC2024-Fall)}, pages = {2577-2465}, abstract = {Recent studies demonstrate the efficacy of machine learning algorithms for learning strategies to solve combinatorial optimization problems. This study presents a novel solution to address the Electric Vehicle Routing Problem with Time Windows (EVRPTW), leveraging deep reinforcement learning (DRL) techniques. Existing DRL approaches frequently encounter challenges when addressing the EVRPTW problem: RNN-based decoders struggle with capturing long-term dependencies, while DDQN models exhibit limited generalization across various problem sizes. To overcome these limitations, we introduce a transformer-based model with a heterogeneous attention mechanism. Transformers excel at capturing long-term dependencies and demonstrate superior generalization across diverse problem instances. We validate the efficacy of our proposed approach through comparative analysis against two state-of-the-art solutions for EVRPTW. The results demonstrated the efficacy of the proposed model in minimizing the distance traveled and robust generalization across varying problem sizes.}, keywords = {control, learning, multi-robot systems}, pubstate = {published}, tppubtype = {conference} } |

| Bayesian Soft Actor-Critic: A Directed Acyclic Strategy Graph Based Deep Reinforcement Learning Conference 2024 ACM/SIGAPP Symposium on Applied Computing (SAC), IRMAS Track, 2024. Tags: control, learning @conference{Yang2024, title = {Bayesian Soft Actor-Critic: A Directed Acyclic Strategy Graph Based Deep Reinforcement Learning}, author = {Qin Yang and Ramviyas Parasuraman}, url = {https://dl.acm.org/doi/10.1145/3605098.3636113}, doi = {10.1145/3605098.3636113}, year = {2024}, date = {2024-04-08}, booktitle = {2024 ACM/SIGAPP Symposium on Applied Computing (SAC)}, series = {IRMAS Track}, abstract = {Adopting reasonable strategies is challenging but crucial for an intelligent agent with limited resources working in hazardous, unstructured, and dynamic environments to improve the system's utility, decrease the overall cost, and increase mission success probability. This paper proposes a novel directed acyclic strategy graph decomposition approach based on Bayesian chaining to separate an intricate policy into several simple sub-policies and organize their relationships as Bayesian strategy networks (BSN). We integrate this approach into the state-of-the-art DRL method -- soft actor-critic (SAC), and build the corresponding Bayesian soft actor-critic (BSAC) model by organizing several sub-policies as a joint policy. We compare our method against the state-of-the-art deep reinforcement learning algorithms on the standard continuous control benchmarks in the OpenAI Gym environment. The results demonstrate that the promising potential of the BSAC method significantly improves training efficiency.}, keywords = {control, learning}, pubstate = {published}, tppubtype = {conference} } |

2023 |


| A Strategy-Oriented Bayesian Soft Actor-Critic Model Conference Procedia Computer Science, 220, ANT 2023, Elsevier, 2023. Tags: autonomy, learning @conference{Yang2023b, title = {A Strategy-Oriented Bayesian Soft Actor-Critic Model}, author = {Qin Yang and Ramviyas Parasuraman}, url = {https://www.sciencedirect.com/science/article/pii/S1877050923006063}, doi = {10.1016/j.procs.2023.03.071}, year = {2023}, date = {2023-03-17}, booktitle = {Procedia Computer Science}, journal = {Procedia Computer Science}, volume = {220}, pages = {561-566}, publisher = {Elsevier}, series = {ANT 2023}, abstract = {Adopting reasonable strategies is challenging but crucial for an intelligent agent with limited resources working in hazardous, unstructured, and dynamic environments to improve the system's utility, decrease the overall cost, and increase mission success probability. This paper proposes a novel hierarchical strategy decomposition approach based on the Bayesian chain rule to separate an intricate policy into several simple sub-policies and organize their relationships as Bayesian strategy networks (BSN). We integrate this approach into the state-of-the-art DRL method – soft actor-critic (SAC) and build the corresponding Bayesian soft actor-critic (BSAC) model by organizing several sub-policies as a joint policy. We compare the proposed BSAC method with the SAC and other state-of-the-art approaches such as TD3, DDPG, and PPO on the standard continuous control benchmarks – Hopper-v2, Walker2d-v2, and Humanoid-v2 – in MuJoCo with the OpenAI Gym environment. The results demonstrate that the promising potential of the BSAC method significantly improves training efficiency.}, keywords = {autonomy, learning}, pubstate = {published}, tppubtype = {conference} } |
